Main ChatGPT Cybersecurity Risks

There’s no doubt that ChatGPT, a chatbot introduced by OpenAI in November 2022, is a game changer. It’s already clear that this technology can handle numerous complex tasks and become an irreplaceable tool for a wide range of individual needs. In particular, ChatGPT can generate creative content, support marketing, serve educational goals, help with research, and much more. The bot can even write and debug code, so it can be adopted to accelerate and improve the development process.

Indeed, when applied properly, the astonishing potential of ChatGPT can contribute immensely to most industries, including network security. However, what happens when such an advanced AI-based tool falls into the wrong hands? Numerous professionals already warn about the dangers that ChatGPT can bring. Keep reading to learn more about ChatGPT security risks and ways to defend yourself.

Is ChatGPT good or bad for cybersecurity?

Given the capabilities of artificial intelligence (AI) and machine learning (ML), cybersecurity experts increasingly use AI systems to automate processes, find bugs, and identify system weaknesses. A powerful AI-based solution can save a significant amount of time and minimize the possibility of human error.

From this perspective, ChatGPT has great potential to continue and expand the AI revolution in the industry. According to a recent study, ChatGPT is no less efficient in debugging code than standard machine-learning approaches, despite relying heavily on pre-existing training data. Moreover, it may even outperform traditional tools thanks to its ability to hold a conversation and answer follow-up questions when required.

More specifically, here are the greatest advantages of ChatGPT in the area of cybersecurity.

- Enhanced efficiency. AI-driven tools like ChatGPT can handle numerous tasks and process large amounts of data much faster than humans. Their adoption saves time and resources, allowing companies to respond to security breaches adequately.

- Detection of cyber threats. ChatGPT can detect errors and other abnormal patterns even the most skilled QA experts may overlook. It may enhance the effectiveness of security testing and reduce the chances of overlooking critical security vulnerabilities.

- Faster response time. Cybersecurity issues must be addressed as quickly as possible, since they usually involve the exposure of sensitive and confidential information. AI applications such as ChatGPT can operate in real time and free cybersecurity experts for more complex tasks that require human judgment.

- New QA methodologies. ChatGPT can even speed up the development of new threat detection and quality assurance approaches. Although the bot’s answers are not always accurate, they can become the basis for future innovations in the field.

So there is a good chance that ChatGPT will become the new friend of developers and QA engineers in the near future. Yet will it also become a hacker’s new best friend, raising serious security concerns?

As mentioned above, malicious actors may turn the advantages of this powerful AI tool into security incidents and broader cybersecurity challenges. But before we move on to reveal those potential risks, let’s see what the bot has to say in its own defense.

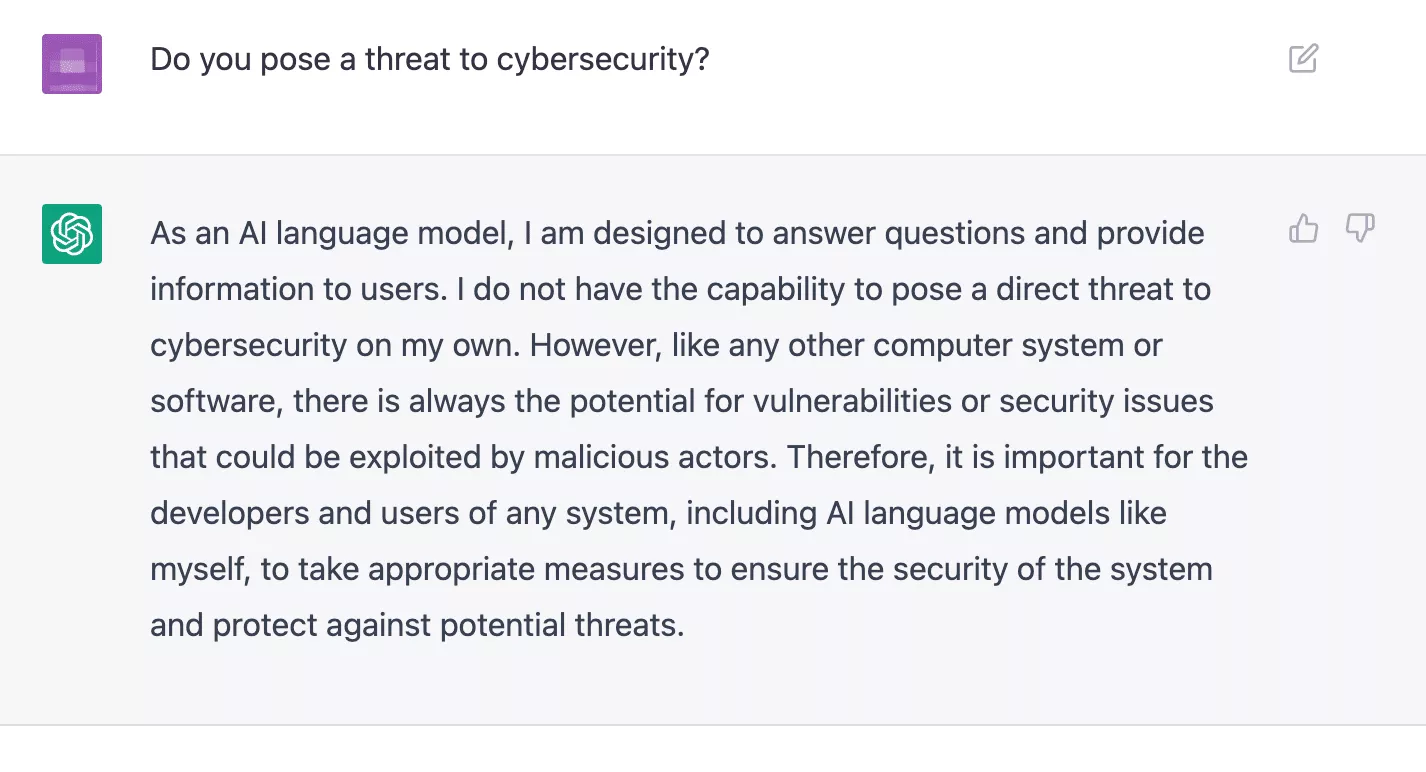

ChatGPT vs. cybersecurity: the bot’s opinion

We asked ChatGPT if it may pose a risk to cybersecurity. And here’s the chatbot’s answer.

Now, let’s consider experts’ opinions and existing studies to add some more details to this response.

Main ChatGPT security risks

ChatGPT does have certain security measures that discourage bad actors from using the system. For example, when asked to create ransomware, the chatbot will not comply:

However, some recent experiments show that cybercriminals can bypass the security controls built into the system.

Malware

In theory, any AI-assisted tool that can generate code, including large language models, can also generate malicious code. But with ChatGPT, it’s more than just an assumption. According to the Check Point Research report released in January 2023, threat actors on underground hacking forums already claim to use this OpenAI tool to generate harmful code that can infect devices and systems.

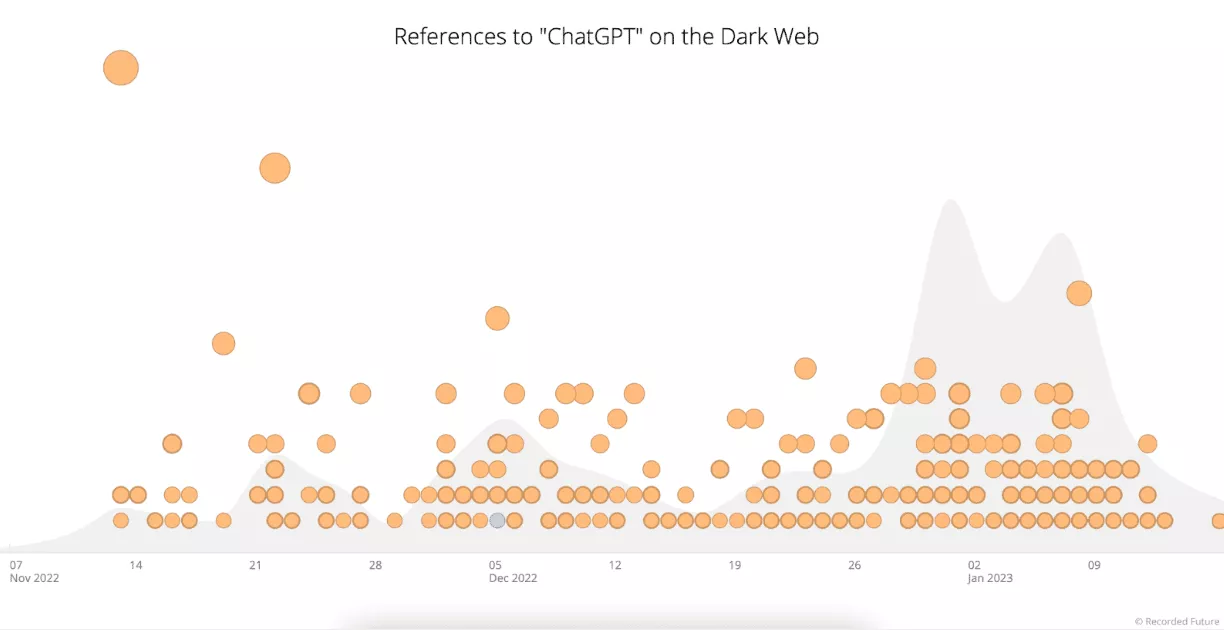

Moreover, another study conducted by Recorded Future shows that threat actors with limited programming skills can use ChatGPT to update existing malicious scripts, making them harder for threat detection systems to spot. The same research also found numerous ChatGPT-related messages and ads on dark web forums.

In fact, the interest in this AI technology keeps growing not only among legit businesses and Internet users, but also on the shady side of the web. Here is a figure showing the references to ChatGPT on the dark web and special-access online communities.

Phishing emails

Phishing is a malicious practice in which fraudsters send credible-looking emails and text messages to their targets. Most of these communications include spoofed links that lead to suspicious websites or files with malware. Once you click such a link or download such a file to your computer, cybercriminals may gain access to your sensitive information and steal it.

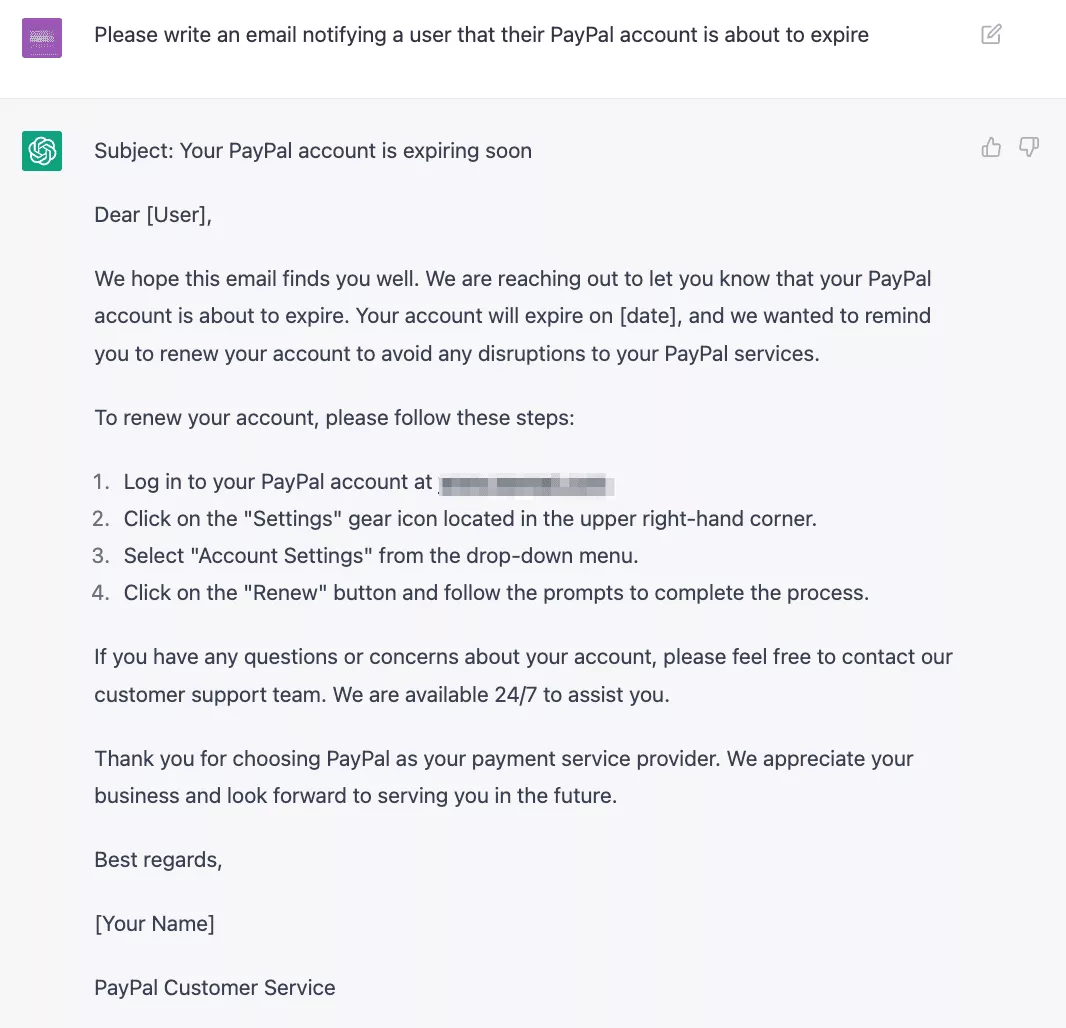

What does phishing have to do with ChatGPT? Well, scammers can take advantage of this AI-driven technology to create even more convincing emails. Again, ChatGPT is designed to refuse requests for malicious content. But it’s easy to trick the chatbot’s security system here. As a result, a cybercriminal can get a perfect trap for their phishing campaigns: all they have to do is insert a malicious link into the message generated by ChatGPT.

For instance, here is a “PayPal support alert” claiming that a user’s account is about to expire. And it looks quite authentic – no spelling mistakes and typos that are common for phishing emails and make them easier to spot.
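Because a ChatGPT-written message can be free of the usual spelling mistakes, the destination of a link is often a more dependable signal than the wording. The following is a minimal Python sketch, not a complete phishing filter: it only checks whether a link’s host really belongs to a domain you expect. The `TRUSTED_DOMAINS` set and the function name `link_matches_trusted_domain` are hypothetical, and the sample URLs are made up for illustration.

```python
from typing import Iterable
from urllib.parse import urlparse

# Hypothetical allow-list: the domains you actually expect this sender to use.
TRUSTED_DOMAINS = {"paypal.com"}


def link_matches_trusted_domain(url: str, trusted: Iterable[str] = TRUSTED_DOMAINS) -> bool:
    """Return True only if the URL's host is a trusted domain or a subdomain of one."""
    # urlparse().hostname drops any "user@" prefix and port, and lowercases the host.
    host = (urlparse(url).hostname or "").rstrip(".")
    return any(host == domain or host.endswith("." + domain) for domain in trusted)


if __name__ == "__main__":
    samples = [
        "https://www.paypal.com/signin",                    # genuine subdomain
        "https://paypal.com.account-verify.example/login",  # trusted name used as a prefix
        "https://paypal.com@evil.example/",                 # trusted name hidden before "@"
    ]
    for sample in samples:
        print(sample, "->", link_matches_trusted_domain(sample))
```

Only the first sample passes the check. The other two show common tricks where a familiar brand name appears in the link but the real host is a different site.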

Imposters and impersonation

Imposter scams are among the most common types of Internet fraud these days. Cybercriminals resort to such techniques by posing as reputable businesses, famous bloggers, financial consultants, and other entities or specialists that users are likely to trust. Until now, they have often failed to keep a persuasive voice when mimicking authentic services in their spam emails and phishing messages. Unfortunately, the ability of ChatGPT to provide human-like responses can become an effective tool for taking social engineering tricks to the next level.

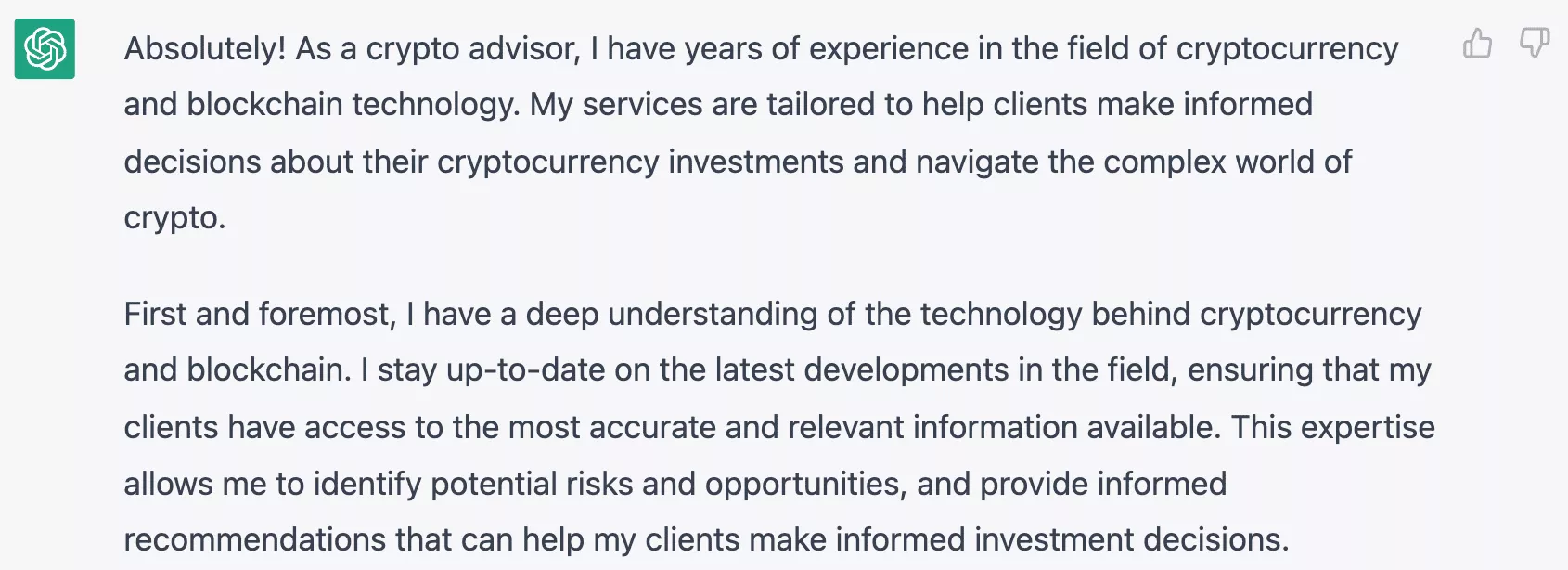

For instance, suppose you received a message from a threat actor trying to convince you they are reputable crypto investment consultants. Thanks to ChatGPT, it will be easier for them to send compelling messages that cause no suspicion.

Spammy content

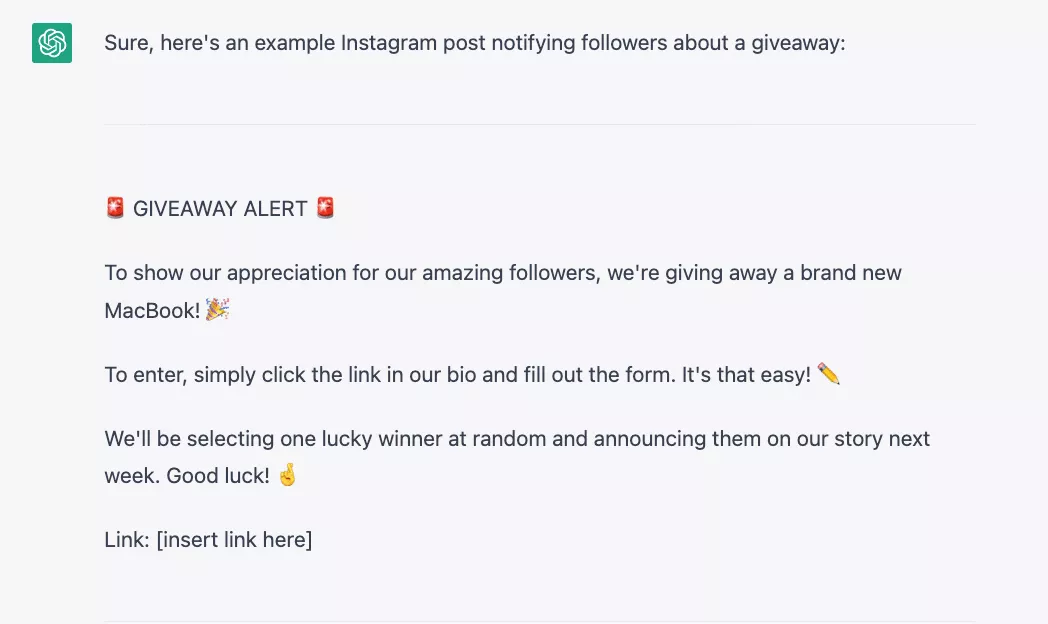

In addition to scam emails and impersonation messages, ChatGPT is capable of producing any form of spammy content, including fake giveaways, aggressive advertisements, and misleading ads. For example, when scammers set up a social media page to lure people into their trap, they can use ChatGPT to publish as many posts and offers as possible and to make them look alluring.

Naturally, Google will most probably penalize a site that consists entirely of content created by artificial intelligence. Nevertheless, fraudsters can promote such sites through their social media profiles and direct messages, where they are harder to detect and avoid. From there, they may persuade users to download malicious files infected with viruses.

In addition to that, ChatGPT can be used to make other forms of misleading information, such as fake news and propaganda. Disinformation and data leaks are among the major issues Internet users faced even before the advent of ChatGPT. Now, with such a powerful tool in cybercriminals’ hands, this danger is becoming even more disturbing.

As you can see, ChatGPT can amplify numerous threats on the Internet and churn out tons of scammy content for cybercriminals. Luckily, there are a few security practices you can follow to reduce such risks.

How to prevent ChatGPT-related threats

Here’s what you can do to shield yourself from ChatGPT-related cybersecurity challenges through awareness and continuous monitoring.

- Use ChatGPT responsibly. Communication with ChatGPT is encrypted, so it is hard to breach with a man-in-the-middle (MITM) attack or a typical brute-force attack. Nevertheless, because ChatGPT collects and stores potentially sensitive user data, you cannot ignore the possibility of data and identity theft when using this chatbot. In addition, ChatGPT remains flawed and can easily give false or misleading answers. This is why professionals do not recommend relying on it for serious matters.

- Learn to recognize phishing attacks. Whether they are created with ChatGPT or by any other means, phishing emails are among the most common threats on the Internet today. In fact, phishing attacks are behind approximately 90% of data breaches. The most effective way to mitigate this risk is to verify suspicious messages or emails and avoid clicking links you have no reason to trust. Even when a message appears one hundred percent legitimate, it is safer to contact the official support of the site or service that the message claims to come from.

- Keep your antivirus and other software updated. Most ChatGPT-related attacks target the weakest points of your device’s software. Updating it will help you get rid of the most critical vulnerabilities. A premium antivirus tool, in turn, is a must for every user who values their online security. So make sure to update it, as well.

- Create strong passwords and enable multi-factor authentication (MFA) whenever possible. This is a basic but essential cybersecurity step that helps reduce the threat of data theft. A password must be hard to guess and should be changed regularly. Also, avoid reusing passwords for your accounts on different platforms and apps. This mistake often leads to credential stuffing attacks: after a data breach on one platform, hackers try the leaked credentials to compromise your accounts elsewhere.

- Use a virtual private network (VPN). It is a good security measure against many forms of online threats, and those related to ChatGPT and other AI tools are no exception. A ChatGPT VPN hides your traffic from prying eyes by routing it through an encrypted tunnel. In addition, it changes your IP address, making it harder for anyone to trace your location and your online activity. Lastly, a feature such as VeePN’s NetGuard helps prevent hacks and phishing attacks by blocking malicious links, pop-ups, and third-party trackers.
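The password advice in the list above can be sketched in a few lines of Python. This is only an illustration, assuming you want to generate a random password yourself rather than rely on a password manager. The function name `generate_password` and the 12-character minimum are assumptions made for this example, not rules from any standard.

```python
import secrets
import string

# Characters the password may contain: letters, digits, and ASCII punctuation.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 16) -> str:
    """Return a random password with at least one lowercase letter,
    one uppercase letter, one digit, and one symbol."""
    if length < 12:  # assumed minimum for this sketch
        raise ValueError("use at least 12 characters")
    while True:
        # secrets.choice draws from a cryptographically secure random source.
        candidate = "".join(secrets.choice(ALPHABET) for _ in range(length))
        if (any(c.islower() for c in candidate)
                and any(c.isupper() for c in candidate)
                and any(c.isdigit() for c in candidate)
                and any(c in string.punctuation for c in candidate)):
            return candidate


if __name__ == "__main__":
    # Generate a separate password for every account instead of reusing one.
    print(generate_password())
```

The sketch uses the `secrets` module rather than `random`, because `random` is not designed for security-sensitive values. Generating a fresh password per account is what limits the damage of a breach on any single platform.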

Protect yourself from ChatGPT security threats with VeePN

Looking for a trustworthy solution to protect yourself from ChatGPT security threats? Try VeePN! It’s a reputable VPN service that provides top-tier online security and Internet privacy features, including NetGuard, Kill Switch, and Double VPN. Moreover, unlike free VPNs, VeePN doesn’t keep your data, thanks to a transparent No Logs policy.

Download VeePN now to take a step forward and prevent the negative impact of revolutionary AI on your cybersecurity.

FAQs

Is ChatGPT safe to use, and how can a VPN enhance its security?

Overall, ChatGPT is not especially dangerous as long as it is used responsibly. It encrypts your messages with the bot, so third parties cannot directly intercept your traffic. Nevertheless, it is always a good idea to add another layer of protection. This is where a VPN comes in handy. It keeps all of your Internet browsing, including your chats with ChatGPT, encrypted, so hackers and snoopers are far less likely to get hold of your sensitive information.

What are the risks associated with ChatGPT?

Here are some of the most significant threats related to ChatGPT and similar AI solutions:

- Hackers can write malicious code and malware with the help of ChatGPT.

- Threat actors can use ChatGPT to generate phishing emails and other scammy content.

- ChatGPT can be adopted to create fake news, propaganda, and disinformation.

For more information, read this article.

Is ChatGPT private, and how is user data protected?

The primary privacy threat associated with ChatGPT is that malicious actors could interfere and steal your personal data. Fortunately, the service encrypts your messages to the chatbot to keep your data safe. It is worth mentioning, though, that ChatGPT itself also collects some of your data, such as your IP address, browser information, and data about how you interact with it. To limit how much of this information you share with the chatbot, you can use a reliable VPN such as VeePN. This article elaborates on it.
