AI-Driven Cyberattacks: Techniques, Real Cases, and Where You’re Most at Risk

Ten years ago, you would probably have thought that an AI-driven security incident could happen only in sci-fi or some unrealistic future. But the situation has changed. In 2024, Statista’s survey found that half of cyber leaders were most worried that generative AI would improve cyberattacks such as phishing, malware, and deepfakes. And not without reason!

In this article, you’ll find real-life cases of AI cyberattacks, including AI phishing, deepfakes, and other modern fraud types, and see how they affect people and organizations of every scale. You’ll also learn some effective safety strategies and how VeePN can help protect your online privacy from advanced con artists’ tools.

Why AI-driven cyberattacks are evolving so quickly

AI attacks go beyond traditional hacking techniques and take cybersecurity threats to a new level. Attackers no longer need to craft emails and guess passwords by hand: they now use self-learning software and ML algorithms to do that work for them. Here are the biggest challenges associated with modern AI cyberattack methods:

Speed and volume

Machine learning tools work extremely fast. Thousands of phishing attempts and vulnerability scans become possible in mere seconds. This means that more users are likely to fall victim to automated AI phishing attacks, and even platforms with millions of users may turn out to be less secure than they seem.

High-level customization

Attackers generate highly convincing deepfakes with the help of artificial intelligence, which can collect information from social media, public databases, and voice messages. Victims may not be able to tell whether they are looking at a real friend in need or an artificially made deepfake video.

Adaptability

When users install defenses such as antivirus software on their devices, cunning AI malware can mutate its code to evade detection and do its harm completely unnoticed.

With that outlined, let’s consider the techniques cybercriminals use and specific cases that illustrate how they work in real life.

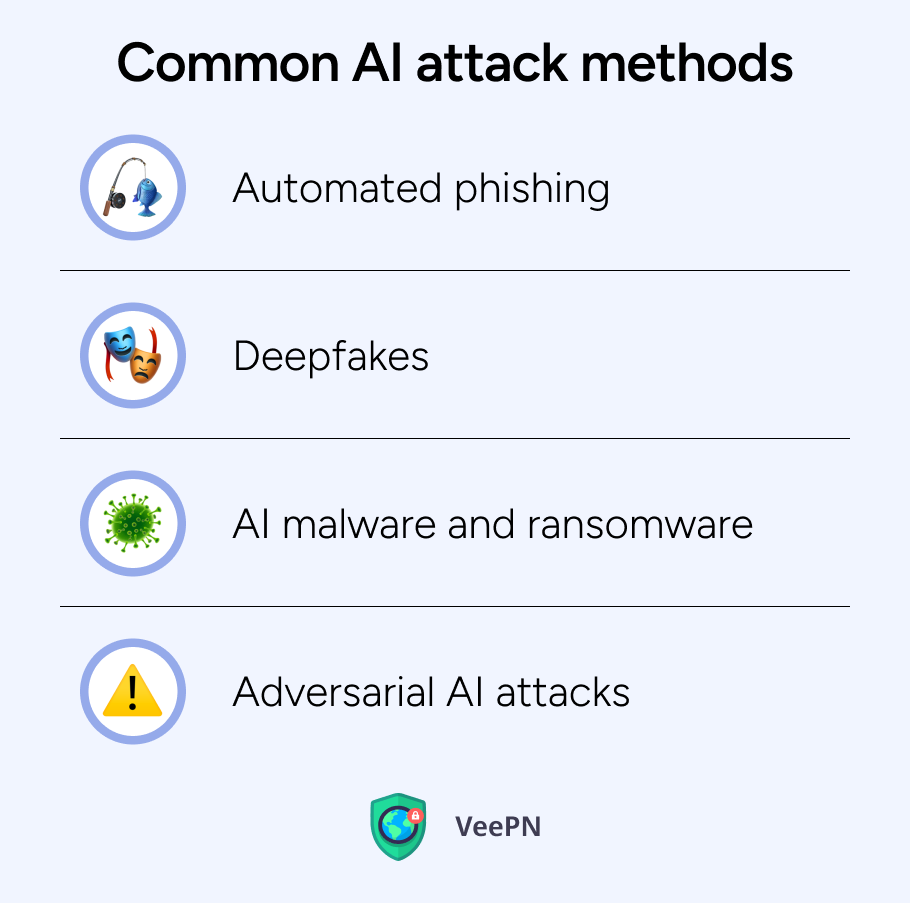

Techniques and cases of AI cyber attacks

Below are the most common types of AI-powered attacks and prominent situations that illustrate their application:

1. Automated phishing

Attackers analyze users’ social media and other digital footprints to generate realistic personal messages and emails that look genuine. With artificial intelligence, they can generate deceptive content that persuades victims to reveal their confidential information.

Cybersecurity professionals have warned users of Gmail, Outlook, and Apple Mail about an AI-driven email fraud threat. Modern AI software analyzes social media activity to build personalized messages that realistically impersonate real contacts. The worst part is that standard scam identification methods often fail to detect such messages, and it can be almost impossible to tell whether a message comes from a real person or is an AI-driven scam.

2. Deepfakes

AI algorithms allow fraudsters to create digital duplicates of communication styles, voices and faces of real people and mimic their behavioral characteristics in audio, video, and text files. These attacks can also be very challenging to detect.

Using deepfakes, scammers created an artificial clone of Arup’s CFO for a scam video call. A finance team member fell for the trick and sent $25 million to the fraudsters after a video call with the deepfake boss.

Another case, reported by the UN in 2023: Southeast Asian cybercriminals stole $37 billion with the help of online scams and deepfakes. The number of AI-powered crimes involving deepfakes increased by 600% in 2024, and the governments of Myanmar, Cambodia, and Laos in particular were unable to stop them.

3. AI malware and ransomware

Traditional malware has fixed code, but AI-generated malware can “learn” how to bypass antivirus tools. The result? Viruses can self-replicate and change their patterns to evade detection by antivirus software running on devices.

One hacking group tied to North Korea runs smart multi-step attacks targeting South Korea for spying and money theft. In a recent attack, they used Dropbox and Google Drive to hide their activity. The attack started with a phishing email that got victims to click an infected link. That link automatically downloaded code that installed a spy program. They also used AES encryption to hide each stage of the attack. Because they used common cloud services and encrypted files, their malware looked like normal network traffic. But once the malicious code was in, the threat actors could see everything on the infected computer.

4. Adversarial AI attacks

AI agents like Google Assistant, Claude AI, Notion AI, and other tools that companies and individual users rely on to make their lives easier can turn into serious cyberattack threats. If scammers put such technology to adversarial use, it may be able to autonomously identify targets, hijack systems, and steal users’ data.

Though this type of AI cybercrime is not yet widespread, Dmitrii Volkov, a research lead at AI research organization Palisade Research, says, “In the next few years, I expect to see autonomous hacking agents being told: ‘This is your target. Go and hack it.’”

Palisade Research experts say they have an idea of the kind of threats AI agents will pose to cybersecurity. To detect them, they built LLM Agent Honeypot, a set of vulnerable servers that pose as sites holding valuable government and military information.

Now that we have covered the key AI-driven techniques cybercriminals use to deceive people, let’s look at the impact AI-driven cyberattacks have.

Outcomes of AI-powered cyberattacks

Unfortunately, AI cyber threats don’t just show how clever criminals can be. Usually, they cause real damage. Let’s see what AI cybercrime victims can face:

Financial loss

Deepfake scams are becoming a fast route to disaster. In 2024, an 82-year-old retiree, Steve Beauchamp, completely drained his retirement account and “invested” $690,000 after seeing a deepfake video of Elon Musk promoting an investment opportunity. The video, which scammers made with AI tools that altered Musk’s voice and mouth movements, looked convincingly real, and all the money went to the fraudsters.

Damage to reputation

With so many deepfakes out there, what guarantees that cybercriminals won’t impersonate you? Deepfakes can show people “saying” or “doing” things that would never happen in real life. The consequences can be serious: legal problems, job loss, or a damaged reputation.

Privacy breaches

Current AI technology allows hackers to gather and analyze immense amounts of user data in seconds. With AI, they can quickly compile addresses, phone numbers, purchase histories, and other information and then use this data to harm you. How?

Imagine that after gathering info about you, scammers know you are a client of, let’s say, Geek Squad. They can send you scam emails with fake invoices and a link to click to cancel the unwanted subscription. But once you click it, they can automatically install AI malware on your device to steal your sensitive information. Or, in a panic, you call the number provided in the email to cancel the so-called “subscription invoice” and give them your credit card details.

Stealing credentials

AI-driven automated hacking bots can carry out brute-force attacks on your login credentials. And if you reuse one password across many websites and platforms, a script that cracks or obtains it once can try the same password on your bank or e-commerce accounts (an attack known as credential stuffing).

Overall, AI-powered cybersecurity threats are much larger in scope and have worse consequences than previous “analog” approaches fraudsters used. Let’s specify the main areas of danger.

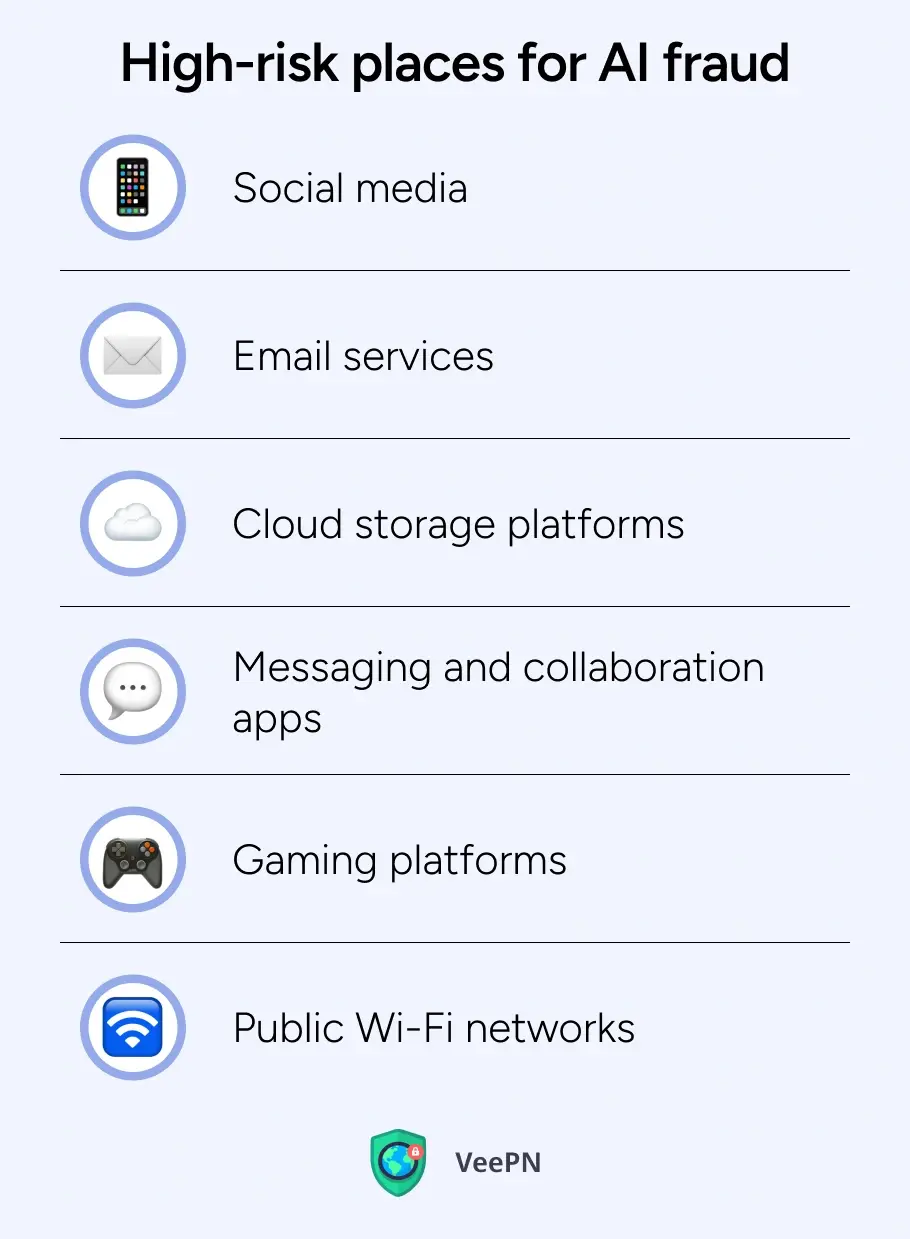

Where you’re most at risk from AI fraud threats

The general characteristic of AI-powered cyberattacks is that cybercriminals often go where there’s a lot of data to steal and many people to fool. So beware when you are on:

Social media

AI-generated posts you may come across on Instagram, Facebook, or YouTube can spread false narratives, manipulate public opinion, trick you into believing sensational or hurtful news, or, worse, lure you into get-rich-quick schemes. Scammers usually launch social engineering attacks by posing as someone you trust and tricking you into clicking malicious links or disclosing personal or financial information.

Email services

AI-driven phishing attacks are not rare for users of Gmail, Outlook, and other email services. Some fraudulent emails look so legitimate that they slip through spam filters and land in users’ inboxes. This way, you may get messages impersonating your bank, service provider, or even a co-worker from your team. If you overlook a warning sign and click the infected link, scammers may get your sensitive data on a silver platter.

Cloud storage platforms

Your Google Drive, Dropbox, OneDrive, or other cloud storage could become a sweet target for scammers, as people often keep tons of documents, images, contracts, and all kinds of sensitive personal data there. Attackers may use AI-powered tools to find weak access permissions, open ports, exposed APIs, unchanged default credentials, or other misconfigurations. What for? If they find one, they can steal data or inject AI-generated malware that spreads across all your synced devices to compromise your data even more.

Messaging and collaboration apps

Viber, Microsoft Teams, and even WhatsApp are not safe places anymore. Cybercriminals use AI to generate fake messages that imitate your coworkers’ tone and writing style. The goal is to make you click infected links or share internal info. Scammers reach out on these platforms because users there are less likely to double-check links before clicking them than they would be in, let’s say, email.

Gaming platforms

Online gamers often use chats to communicate with teammates. That’s where scammers show up. They use artificial intelligence to scan gaming community forums, pick up the same slang and community lingo, and send people malware links disguised as tempting offers of free items, in-game currency, or account upgrades.

Public Wi-Fi networks

When you are at a coffee shop or an airport, using free Wi-Fi may sound attractive. But guess what? You can become a victim of AI-based attacks that monitor network traffic to intercept your login credentials and credit card data. On an unsecured hotspot, you’re always in danger.

Having specified the places of increased danger, let’s cover ways to protect yourself from AI-driven threats.

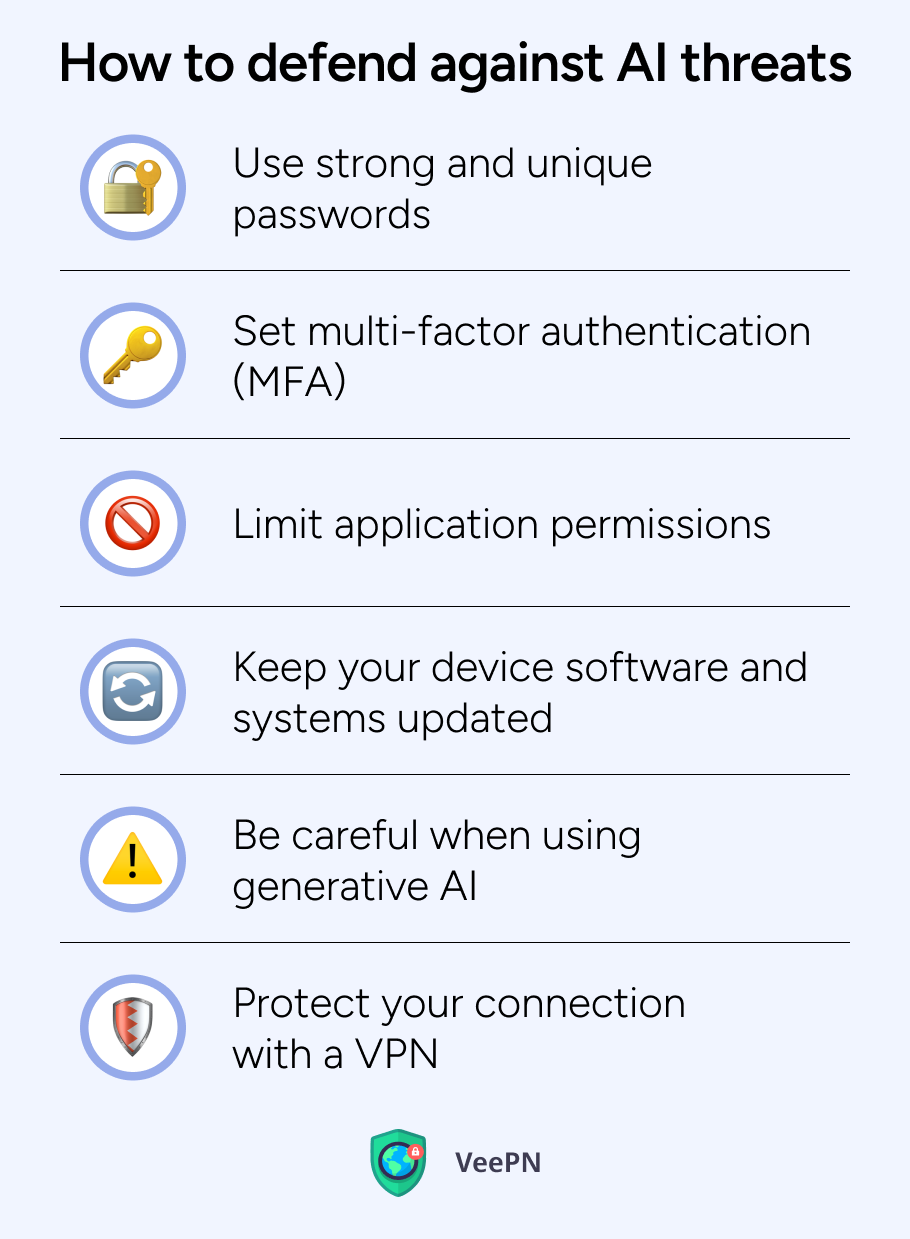

Steps to protect yourself from AI threats

Take the following steps to safeguard yourself in case you become a target of AI-based cyberattacks:

Use strong and unique passwords

Attackers can easily access your accounts if you use the same password on multiple platforms. If even one platform is exposed in an AI-powered cyberattack and your password becomes known to con artists, you automatically risk your privacy on every other platform where you use the same password. A good solution is to use a password manager that can generate and store a strong, random password for each of your accounts.
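To make "strong and random" concrete, here is a minimal sketch of what a password generator might do under the hood. The function name `generate_password` and the 12-character minimum are illustrative assumptions, not the behavior of any particular password manager.

```python
import secrets
import string

def generate_password(length: int = 20) -> str:
    """Return a random password mixing letters, digits, and punctuation.

    Hypothetical helper for illustration only.
    """
    if length < 12:
        raise ValueError("use at least 12 characters")
    alphabet = string.ascii_letters + string.digits + string.punctuation
    while True:
        # secrets uses the operating system's cryptographic randomness,
        # unlike the random module, which is predictable.
        candidate = "".join(secrets.choice(alphabet) for _ in range(length))
        # Retry until every character class appears at least once.
        if (any(c.islower() for c in candidate)
                and any(c.isupper() for c in candidate)
                and any(c.isdigit() for c in candidate)
                and any(c in string.punctuation for c in candidate)):
            return candidate

if __name__ == "__main__":
    # One fresh password per account, never reused.
    for site in ("bank", "email", "shop"):
        print(site, generate_password())
```

Because each account gets its own random password, a leak on one platform tells attackers nothing about your logins elsewhere.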

Set multi-factor authentication (MFA)

As sad as it may sound, even strong passwords alone are not sufficient protection against innovative generative AI attacks. True, if you add MFA, you will spend more time logging into platforms and services, because you have to pass a second check such as a one-time code, a phone call, or fingerprint verification. But this way, even if your credentials somehow fall into the wrong hands, there’s far less chance your accounts will be compromised.
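For the curious, the one-time codes shown by authenticator apps are typically time-based one-time passwords (TOTP, RFC 6238). The sketch below shows the idea using only Python's standard library. The function names are illustrative, and real services usually hand you the shared secret as a base32 string in a QR code, which is skipped here for brevity.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional

def totp(secret: bytes, at: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Compute a time-based one-time code (HMAC-SHA1 variant of RFC 6238)."""
    now = time.time() if at is None else at
    counter = int(now // step)  # number of 30-second windows since 1970
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation, as in RFC 4226
    chunk = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(chunk % 10 ** digits).zfill(digits)

def verify(secret: bytes, submitted: str, at: Optional[float] = None) -> bool:
    """Check a submitted code in constant time."""
    return hmac.compare_digest(totp(secret, at), submitted)

if __name__ == "__main__":
    demo_secret = b"12345678901234567890"  # RFC test secret, not for real use
    print(totp(demo_secret, at=59))        # prints 287082
```

The code changes every 30 seconds and depends on a secret that never leaves your device, so a stolen password alone is not enough to log in.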

Limit application permissions

Before you install an app or extension on your device, check the permissions it requires. Does the platform you want to use really need to see your geolocation or read your contacts to run its services? If you allow apps to know too much and then accidentally install AI-driven malware, fraudsters will get more information than they otherwise would and can exploit it against you. Therefore, if an app demands too much data, better steer clear.

Keep your device software and systems updated

One of the main purposes of software updates is to eliminate security vulnerabilities in the previous system version. Old bugs might present weak spots that criminals nimbly take advantage of. Consider downloading and installing new patches once they are available to strengthen your digital security.

Be careful when using generative AI

Yes, chatbots like ChatGPT and other AI platforms are helpful, but they also carry privacy risks. So, before sharing any personal or business details with an AI tool, consider the security risks.

Protect your connection with a VPN

A virtual private network (VPN) encrypts all your traffic so that hackers using AI systems won’t be able to read what they intercept or trace your actions. It also hides your IP address, so your whereabouts and online habits are not revealed to con artists.

There are many VPN solutions available. The word “free” may look attractive, but in reality, free VPNs are not as good as they seem. They often sell users’ personal information and browsing logs to third parties. Many of them offer weak encryption protocols, bombard you with ads, and slow down your connection. Therefore, consider using a reliable provider like VeePN.

Get strong protection from AI-driven cyber attacks with VeePN

These are the protection measures against AI cyber threats that VeePN offers:

Military-grade encryption

VeePN protects whatever you do online with AES-256, one of the strongest widely used encryption standards to date. Even if cybercriminals attempt to use advanced sniffing tools to intercept your Wi-Fi traffic, they will see only unreadable gibberish instead of your real data.

IP address masking

AI algorithms personalize modern phishing attacks by analyzing users’ behavior and monitoring their geolocation. VeePN lets you conceal your real IP address by choosing from 2,500+ servers in 89 locations to protect your anonymity and prevent location-based profiling or tracking.

NetGuard to stop AI malware

VeePN’s NetGuard feature won’t allow you to browse dangerous domains. It automatically blocks website tracking and harmful scripts. The feature is also good for dealing with AI-generated malware that mimics legitimate websites.

Built-in antivirus

With this feature, AI malware will not be able to work and harm your Windows and Android devices. VeePN’s antivirus scans your downloads, stops suspicious behavior and blocks self-replicating or code-evolving AI threats.

Breach Alert

VeePN searches and analyzes stolen databases on the dark web to find out whether your data was exposed. If it finds your emails or passwords there, it sends you an instant alert so that you can change your credentials and protect yourself as soon as possible.
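The article does not describe how Breach Alert works internally, so the following is not VeePN's implementation. It is a generic sketch of one well-known way to check a password against leaked data without revealing it: a k-anonymity style range lookup, where only the first five characters of the password's hash are shared with the lookup service. All names are hypothetical, and the breach database is replaced by a small in-memory stand-in.

```python
import hashlib
from typing import Callable, Dict, Iterable, Set

def sha1_hex(password: str) -> str:
    """Uppercase hex SHA-1 digest of the password."""
    return hashlib.sha1(password.encode("utf-8")).hexdigest().upper()

def build_index(leaked: Iterable[str]) -> Dict[str, Set[str]]:
    """Stand-in breach database: hash suffixes grouped by 5-char prefix."""
    index: Dict[str, Set[str]] = {}
    for pw in leaked:
        digest = sha1_hex(pw)
        index.setdefault(digest[:5], set()).add(digest[5:])
    return index

def is_exposed(password: str,
               lookup_range: Callable[[str], Set[str]]) -> bool:
    """Return True if the password's hash appears in the breach data.

    Only the 5-character prefix is passed to lookup_range, so the
    service never sees the full hash, let alone the password.
    """
    digest = sha1_hex(password)
    prefix, suffix = digest[:5], digest[5:]
    return suffix in lookup_range(prefix)

if __name__ == "__main__":
    index = build_index(["password", "123456", "qwerty"])  # assumed sample
    lookup = lambda prefix: index.get(prefix, set())
    print(is_exposed("password", lookup))          # True
    print(is_exposed("Xq7!vR2#mLp9@tZw", lookup))  # False
```

In a real service, `lookup_range` would be a network request to a breach database rather than a dictionary lookup.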

Kill Switch for leak protection

In case your VPN connection glitches, VeePN’s Kill Switch automatically cuts your Internet access to prevent your unprotected data from leaking.

Up to 10 devices coverage

One VeePN account lets you secure up to 10 devices with an active VPN connection, be it your phone, laptop, smart TV, or router.

Try using VeePN without risk, enjoying our 30-day money-back guarantee.

FAQ

Cybercriminals use AI tools to generate realistic deepfakes, hijack data, and run phishing attacks that manipulate human behavior.

Cybercriminals distribute fake or misleading information through their tactics to obtain victims’ personal information. Such AI-driven attacks may bypass the device’s threat detection tools like antivirus. Learn more types and how to keep yourself safe in this article.
